Responsible AI Development: A Framework for Governance, Safety, and Ethics - The significance, differences, and interconnectedness of each

I. Introduction:

Context: Artificial intelligence (AI) is undergoing rapid development and evolution, impacting every facet of modern society.1 From healthcare and finance to transportation, employment, government services, and creative industries, AI systems are displaying increasingly sophisticated capabilities.2 These advancements promise significant benefits, including enhanced efficiency, productivity, scientific breakthroughs, and solutions to complex challenges.1 However, the increasing power and autonomy of AI have also introduced risks and ethical considerations that demand careful evaluation.1

The speed of AI's evolution presents an inherent tension. The rapid pace of development and deployment 5 calls for immediate attention to establish guardrails against potential harms.1 Yet this same speed makes it difficult to create comprehensive, future-proof, and harmonized frameworks for governance, safety, and ethics.5 Regulations struggle to keep up with technological advancements 5, and establishing international consensus on standards for a technology that evolves quickly and operates globally poses significant hurdles.31 This challenge suggests that effective oversight cannot rely on static, one-time solutions. Instead, it demands agile, adaptive, and iterative approaches capable of evolving alongside AI itself.

Problem Statement: The discourse surrounding responsible AI includes three critical concepts – AI Governance, AI Safety, and AI Ethics – that are frequently invoked, yet often without clear distinction. This ambiguity hinders effective communication, policy development, and implementation, leading to gaps in oversight and unaddressed risks.36 At the same time, public awareness of and concern about the potential negative impacts of AI – algorithmic bias, privacy violations, threats to safety, job displacement, and manipulation – are growing.4 This apprehension underlines the demand for transparent, accountable, and ethically grounded approaches to AI development and deployment.

Paper Objectives: This paper seeks to bring clarity to these domains. It aims to:

Provide clear definitions of AI Governance, AI Safety, and AI Ethics, drawing on recognized sources such as the Organisation for Economic Co-operation and Development (OECD), the U.S. National Institute of Standards and Technology (NIST), and the United Nations Educational, Scientific and Cultural Organization (UNESCO).

Evaluate the goals, scopes, and principles of each of the concepts, analyzing differences, overlaps, and boundaries.

Examine the role each of the three plays across the stages of the AI lifecycle, from planning and design to deployment and ongoing operation.

Determine the responsibilities of key stakeholders – governments, developers, corporations, organizational adopters, and end-users – under each framework.

Offer recommendations derived from established guidelines and best practices for how these stakeholders could approach and implement governance, safety, and ethical considerations.

Explore future evolutions in these fields, emerging challenges, anticipated trends, and potential advancements in regulatory and technical approaches.

II. Defining the Three Pillars: AI Governance, Safety, and Ethics

An understanding of AI Governance, AI Safety, and AI Ethics requires clear definitions and a description of their core tenets, as established by international bodies and research institutions.

A. AI Governance

Definition: AI Governance refers to the systems, structures, processes, standards, policies, regulations, and guardrails established to direct, manage, and control the lifecycle of AI systems.25 Its purpose is to ensure that AI development and deployment align with organizational objectives, legal requirements, ethical principles, and societal values.25 A key function of AI governance is to proactively manage the inherent risks associated with AI, such as algorithmic bias, privacy infringements, security vulnerabilities, and misuse, while simultaneously fostering an ecosystem for responsible innovation and building public trust.25 It addresses the shortfalls that can arise from the human element in AI creation and maintenance.25

Core Principles: A synthesis of principles from leading frameworks, such as those of the OECD, NIST, and UNESCO, suggests a common set of tenets for responsible AI governance:

Accountability: Organizations and individuals in the AI lifecycle ("AI actors") are responsible for the proper functioning of AI systems and for their impacts, commensurate with their roles and the context.19 This requires mechanisms for traceability (e.g., regarding datasets, processes, decisions) and human oversight to enable evaluation and responses to inquiries or adverse outcomes.54

Transparency & Explainability: It must be possible to understand how AI systems operate, the data they use, and the logic behind their decisions and outputs.11 This fosters understanding, makes stakeholders aware of their interactions with AI, enables individuals to understand and challenge outputs, and builds trust.14

Fairness & Non-Discrimination: AI systems must be designed and deployed to avoid unfair bias and to prevent discrimination based on characteristics such as race, gender, age, or other attributes.4 This requires bias mitigation strategies, representative and carefully vetted data, and regular auditing.29

Risk Management: A systematic approach to identifying, assessing, measuring, prioritizing, and mitigating potential risks associated with AI systems throughout their lifecycle is necessary.1 This includes risks to safety, security, privacy, fairness, and human rights.54

Human Rights & Democratic Values: AI systems should adhere to the law, human rights (including freedom, dignity, autonomy), democratic values, fairness, social justice, and internationally recognized labor rights.19 This mandates human-centered approaches and safeguards such as human agency and oversight.19

Safety, Security & Robustness: AI systems should function appropriately, reliably, and securely, even under adverse conditions, without posing unreasonable safety or security risks.19 Mechanisms to override, repair, or safely decommission systems are important.19

Privacy & Data Protection: Personal data should be protected throughout the AI lifecycle in accordance with laws and standards, respecting individuals' rights to privacy and control over data.11

Key Frameworks: Several influential frameworks provide structure and guidance for AI governance:

OECD AI Principles: Adopted in 2019 and updated in 2024, these represent the first intergovernmental standard on AI.19 They promote innovative and trustworthy AI that respects human rights and democratic values, offering five values-based principles (inclusive growth, human rights/values, transparency, robustness/security/safety, accountability) and five recommendations for policymakers.54 The principles and the OECD definition of an AI system are widely used in international regulatory efforts.1

NIST AI Risk Management Framework (AI RMF): Developed by the U.S. National Institute of Standards and Technology, this framework provides an approach for organizations to manage AI risks and build trustworthy AI systems.1 It is built on four core functions: Govern (establish risk culture), Map (contextualize risks), Measure (assess and monitor risks), and Manage (treat risks).1 It focuses on the socio-technical nature of AI risks 1 and is designed for application across the entire AI lifecycle.1

EU AI Act: A landmark regulation establishing a risk-based legal framework for AI in the European Union.5 It classifies AI systems by risk level (unacceptable, high, limited, minimal) and imposes corresponding obligations, with the most stringent requirements applying to high-risk systems.5 The AI Act aims to ensure safety, protect fundamental rights, and foster trustworthy AI development and use.47 It includes specific provisions for general-purpose AI (GPAI) models, including those deemed to pose systemic risks.46

UNESCO Recommendation on the Ethics of AI: This is the first global standard-setting instrument focused specifically on AI ethics.47 It provides a comprehensive framework based on four core values (human rights/dignity, peaceful societies, diversity/inclusion, environmental flourishing) and ten principles (including proportionality, safety/security, fairness, sustainability, privacy, human oversight, transparency/explainability, responsibility/accountability, awareness/literacy, and multi-stakeholder governance).60

Other Frameworks: Additional influential guidelines include the U.S. Blueprint for an AI Bill of Rights 38, various ISO standards relevant to AI management and risk (e.g., ISO/IEC 42001 for AI Management Systems, ISO 26000 for Social Responsibility) 1, and the IEEE's Ethically Aligned Design initiative.50

Levels of Governance (Organizational): Organizations adopt governance approaches with varying degrees of formality:

Informal Governance: Relies on organizational values and principles, with informal review boards, but lacks structured processes.25

Ad hoc Governance: Develops specific policies in response to challenges or risks, lacking comprehensiveness.25

Formal Governance: Implements a comprehensive, systematic framework aligned with values, laws, and regulations, incorporating processes like risk assessment, ethical review, and formal oversight mechanisms.25

B. AI Safety

Definition: AI Safety is an interdisciplinary field dedicated to preventing accidents, misuse, and other harmful outcomes, including catastrophic or existential risks, arising from AI systems.94 It combines technical approaches such as AI alignment and machine ethics with sociotechnical considerations.94 The goal is to ensure that AI systems operate reliably, securely, and in ways that are beneficial to humanity, and that they remain under human control.94 This aligns closely with governance principles emphasizing robustness, security, and safety throughout the AI lifecycle.19

Core Principles/Concepts:

AI Alignment: Alignment means ensuring that the goals and behaviors of AI systems conform to human objectives, preferences, and ethical principles.23 This includes both "outer alignment" (correctly specifying the intended purpose) and "inner alignment" (ensuring the AI robustly adopts that specification and does not develop unintended internal goals).103 It remains an open research problem, particularly for highly capable future systems.103

Harm Prevention: AI safety focuses on proactively identifying and mitigating various harms. These include misuse, where actors deliberately use AI for harmful purposes (e.g., disinformation, cyberattacks) 23; accidents or mistakes, where AI systems cause unintended negative outcomes due to errors, unreliability, or unforeseen interactions 23; and misalignment, where AI systems pursue goals that differ from those intended, potentially leading to harmful outcomes even without malicious intent.23 Preventing harms like bias or privacy violations is also intrinsic to this goal.25

Robustness & Reliability: AI systems must function correctly, consistently, and predictably, even when facing bad data, unexpected inputs, or adversarial attempts to cause failure.19

Security: Protecting AI systems, including their models and data, from threats such as unauthorized access, manipulation (e.g., data poisoning, adversarial examples), theft of intellectual property (model stealing), or disruption of service.19

Control & Oversight: Ensuring that humans can effectively monitor, guide, and intervene in the operation of AI systems.19 This includes designing systems with mechanisms for override, safe shutdown, or repair.19 It also includes developing scalable oversight techniques that allow humans to effectively supervise systems that are much faster or more complex than they are.23

Testing & Evaluation (Red Teaming): Employing methods to proactively discover vulnerabilities, biases, potential failure modes, and alignment challenges before and during deployment.26 Red teaming specifically involves simulating attacks or probing for weaknesses to test and identify safety gaps.26
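The robustness and testing concepts above can be made concrete with a very small probe. The following is a minimal sketch, not a method taken from any cited framework: it checks how often a model's prediction stays unchanged when its inputs receive small random perturbations. The names `toy_model` and `stability_rate`, the weights, and the noise level are all hypothetical and chosen only for illustration. Random-noise probing is also much weaker than genuine adversarial testing or red teaming, which searches deliberately for worst-case inputs.

```python
import random


def toy_model(features):
    """Hypothetical stand-in classifier: thresholds a weighted sum of inputs."""
    weights = [0.6, -0.4, 0.2]  # illustrative values, not a trained model
    score = sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def stability_rate(model, inputs, noise=0.05, trials=20, seed=0):
    """Return the fraction of inputs whose prediction never flips under noise.

    Each input is perturbed `trials` times by adding independent uniform
    noise in [-noise, +noise] to every feature.
    """
    rng = random.Random(seed)  # fixed seed keeps the probe reproducible
    stable = 0
    for x in inputs:
        baseline = model(x)
        flipped = any(
            model([v + rng.uniform(-noise, noise) for v in x]) != baseline
            for _ in range(trials)
        )
        if not flipped:
            stable += 1
    return stable / len(inputs)


# Inputs far from the decision boundary should be unaffected by small noise.
robust_inputs = [[1.0, 0.0, 0.0], [-1.0, 1.0, 0.0]]
# An input very close to the boundary is a candidate for unstable behavior.
borderline_inputs = [[0.0, 0.0, 0.01]]

robust_rate = stability_rate(toy_model, robust_inputs)
borderline_rate = stability_rate(toy_model, borderline_inputs)
print("robust inputs stable:", robust_rate)
print("borderline inputs stable:", borderline_rate)
```

A low stability rate on realistic inputs would be one signal, among many, that a system needs further robustness work before deployment.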

Key Research Areas/Approaches: AI safety research spans multiple domains:

Technical AI Safety: Focuses on developing algorithms and techniques for alignment (e.g., Reinforcement Learning from Human Feedback - RLHF 97), interpretability (understanding model internals), robustness verification, and formal methods.23

AI Safety Governance: Investigates policies, standards, norms, and international cooperation mechanisms needed to manage AI risks at global levels.94

Sociotechnical Safety: Integrates technical safety with an understanding of social contexts, incentive structures, power dynamics, and human-computer interaction to address risks emerging from the interplay of technology and society.1

Frameworks: Leading labs have developed safety frameworks, such as OpenAI's Preparedness Framework 99 and Google DeepMind's Frontier Safety Framework 102, that involve structured evaluations and risk mitigation protocols.

Local vs. Global Solutions: Local safety solutions focus on making individual AI systems safe, whereas global solutions implement safety measures consistently across various AI systems and jurisdictions.94 Effectively managing the risks of advanced AI necessitates scaling local measures globally through international cooperation and harmonized governance.94

C. AI Ethics

Definition: AI Ethics is a field of applied ethics, within the ethics of technology, that examines the moral issues arising from the design, development, deployment, and use of artificial intelligence.2 It involves the application of widely accepted standards of right and wrong to guide moral conduct in AI-related activities.36 The aim is to ensure that AI technologies respect human values, uphold fundamental rights, promote fairness and well-being, and align with societal norms.10

Core Principles: AI ethics emphasizes the normative dimension. Key ethical principles include:

Fairness & Justice: Preventing algorithmic bias and discrimination, ensuring equitable treatment, access, and outcomes for all individuals and groups.2

Accountability & Responsibility: Establishing who is morally and legally responsible for the actions and consequences of AI systems, particularly when harm occurs.10 Addressing the potential "responsibility gap" created by autonomous systems.10

Transparency & Explainability: Addressing the "black box" problem by making the decision-making processes understandable by humans, enabling scrutiny, trust, and recourse.10

Human Autonomy & Dignity: Ensuring AI systems respect and enhance, rather than undermine, human freedom, self-determination, and dignity.10 This includes preventing undue manipulation or coercion.69

Privacy & Data Protection: Upholding the right to privacy and ensuring the responsible use, storage, and sharing of personal data by AI systems.10

Beneficence & Non-Maleficence: Designing and using AI to promote well-being and avoid causing harm ("do good" and "do no harm").4

Philosophical Underpinnings/Approaches: AI ethics draws upon various established ethical theories:

Deontology: Emphasizes duties, rules, and adherence to moral principles, regardless of consequences.10 Isaac Asimov's "Three Laws of Robotics" are a classic, albeit fictional, example.10

Consequentialism/Utilitarianism: Evaluates the morality of actions based on their outcomes, aiming to maximize overall happiness and well-being.10 This approach often informs policy decisions.113

Virtue Ethics: Focuses on the character of the moral agent (the AI developer or, potentially, the AI itself), emphasizing virtues such as honesty, fairness, courage, and integrity.10

Rights-Based Ethics: Centers on entitlements or claims such as human rights or civil rights, which should not be violated.10

These philosophical frameworks highlight a significant challenge. While there is agreement on high-level principles like fairness and transparency 37, translating these values into concrete, universally agreed-upon actions for AI systems is difficult.36 Different ethical theories can lead to contradictory recommendations in specific scenarios, such as the "trolley problem" applied to autonomous vehicle decision-making in unavoidable accidents.14 This "normative gap" between principles and practical implementation means that creating "ethical" AI is not a straightforward technical exercise. Ethical AI development requires context-specific analysis, deliberation among diverse stakeholders 46, and careful consideration of trade-offs, rather than reliance on top-down rules alone. It underlines that AI ethics is not merely a technical puzzle but is deeply interwoven with societal values, power structures, and moral debates.

Key Branches/Areas: AI ethics encompasses several specialized areas:

Machine Ethics (or Artificial Morality): Addresses the theoretical and practical challenges of designing AI systems capable of making moral decisions or behaving ethically, that is, of creating Artificial Moral Agents (AMAs).2 Implementation strategies include bottom-up (learning from examples), top-down (programming ethical theories), and hybrid approaches.10

Robot Ethics: Specifically considers the ethical implications of designing, constructing, using, and interacting with embodied AI systems (robots).10

Specific Ethical Dilemmas: AI ethics grapples with numerous concrete problems, including: Algorithmic Bias 10, Opacity/Explainability 10, Privacy and Surveillance 10, Job Displacement and Economic Impact 10, Manipulation and Influence 70, Autonomous Systems (especially weapons and vehicles) 10, the Moral Status or Personhood of AI 10, and concerns about future superintelligence (Singularity) and value alignment.10

III. Disentangling the Concepts: Differences, Overlaps, and Boundaries

Although AI Governance, AI Safety, and AI Ethics are deeply interconnected, understanding their distinct goals, scopes, and roles across the AI lifecycle is crucial for comprehensive oversight.

A. Contrasting Goals and Scope

AI Governance aims to establish order and control. Its fundamental focus is on the structures, rules, and processes that guide AI development and deployment across the ecosystem.25 The goal is to ensure AI systems operate within legal boundaries, meet organizational objectives, align with societal expectations, manage risks effectively, and foster responsible innovation and trust.25 The scope is broad, encompassing policy formulation, regulatory compliance, standardization, organizational roles, risk management frameworks, and auditing procedures. Governance is often driven by institutions – governments, regulatory bodies, and corporate leadership.19

AI Safety aims to prevent harm. Its focus is the technical and operational integrity of AI systems, particularly advanced or autonomous ones, to avoid accidents, misuse, and unintended negative consequences, including catastrophic outcomes.94 The goal is to ensure AI systems function reliably and securely, remain controllable, and behave as intended by their designers.94 Its scope emphasizes aspects such as alignment research, robustness testing, security protocols, vulnerability analysis, and control mechanisms.23 Safety initiatives are often led by researchers, developers, and specialized safety teams or organizations.7

AI Ethics aims to provide moral guidance. Its focus is on evaluating the moral dimensions of AI, applying principles of right and wrong to design, development, and use.2 The goal is to ensure AI respects human values, rights, and dignity, promotes fairness and justice, and avoids morally objectionable outcomes.10 Its scope is fundamentally normative and philosophical, asking "should" questions about bias, fairness, autonomy, responsibility, privacy, and even the moral status of AI itself.10 Ethics informs both governance and safety but is distinct in its focus on values and moral justification. It is driven by ethicists, philosophers, and civil society organizations, and is increasingly being integrated into formal governance frameworks.4

B. Identifying Interdependencies and Synergies

The three domains are not isolated silos but rather deeply interdependent components of responsible AI:

Ethics as the Foundation: Ethical principles serve as the bedrock upon which governance structures and safety protocols are built.10 Major governance frameworks like the OECD Principles, NIST AI RMF, EU AI Act, and UNESCO Recommendation incorporate ethical values such as fairness, accountability, transparency, and respect for human rights.1 The core AI safety goal of alignment is ensuring AI systems adhere to human values and ethical intentions.94

Governance as the Enabler: Governance provides the mechanisms – policies, standards, regulations, organizational roles, risk management processes, audits, enforcement actions – to translate abstract ethical principles and safety requirements into tangible practices.25 Without governance, principles remain aspirational. The NIST AI RMF, for example, explicitly uses its "Govern" function to frame the management of risks related to safety, fairness, and other trustworthiness characteristics.1

Safety as an Ethical Prerequisite: Achieving safety – ensuring AI systems are robust, secure, reliable, and controllable – is a necessary condition for meeting ethical obligations.10 An AI system that functions unpredictably, is compromised, or causes accidental harm cannot be considered ethically sound, particularly if it violates principles like non-maleficence.10 Technical safety measures help mitigate ethical risks such as bias amplification through unreliable outputs or privacy breaches due to security flaws.63

Shared Principles, Different Lenses: Many core principles, including transparency, accountability, fairness, robustness, security, and privacy, are relevant across all three domains.19 However, each domain may approach these principles with a different emphasis. For example, transparency could be viewed by governance as a regulatory requirement for disclosure, by ethics as essential for respecting autonomy and enabling trust, and by safety as a condition for verifying system behavior and identifying flaws. Similarly, risk management is a function of both governance 1 and safety 94, but the types of risks considered and the thresholds for acceptability are informed by ethical judgments about potential harms and societal values.36

C. Mapping Roles Across the AI Lifecycle

The interconnected nature of governance, safety, and ethics becomes clear when examining their roles at different stages of the AI lifecycle, as defined by frameworks like the OECD's 55 or NIST's.1

Plan & Design:

Ethics: Involves defining the purpose of the AI system and evaluating its potential ethical implications. Activities include identifying potential harms (e.g., discriminatory impacts, privacy risks), establishing the core ethical values and principles that guide development (fairness, non-maleficence), and considering the impacts on all stakeholders.12

Safety: Safety considerations begin with defining specific safety requirements, assessing the need for robustness against anticipated failures or misuse, outlining security needs, and formulating a strategy for aligning the system's behavior with intended goals.19

Governance: Governance involves establishing the context for risk management (NIST RMF's MAP function 1), defining roles and responsibilities for the project team, conducting initial risk assessments, ensuring the project aligns with organizational values and policies, and documenting these decisions (part of NIST RMF's GOVERN function 1).

Data Collection & Preprocessing:

Ethics: Focuses on the ethical sourcing and handling of data. This includes ensuring datasets are representative and minimize biases, protecting individuals' privacy, obtaining consent where necessary, and adhering to data protection regulations.12

Safety: Emphasizes data quality, accuracy, and integrity, which are crucial for the reliability and robustness of the AI model.63 It also involves securing data pipelines against tampering or corruption.67

Governance: Implementing data governance policies, data quality checks, lineage tracking (understanding data origins and transformations), access controls, and compliance verification with regulations.25 Documenting data provenance is a key task.54

Model Development & Training:

Ethics: Selecting and implementing algorithms and training processes that are fair and mitigate bias. Designing models with transparency and explainability, allowing for later evaluation of their internal workings.12

Safety: Building models that are technically robust (resistant to errors and perturbations) and secure against attacks (e.g., adversarial examples). Implementing AI alignment techniques, such as RLHF 97, and adversarial training to improve resilience.51 Testing for vulnerabilities.85

Governance: Documenting the model development process, architectural choices, training parameters, and data, and applying version control. Risk management continues through this phase (NIST RMF's MEASURE/MANAGE functions 1), alongside adherence to technical standards and internal policies.49

Testing & Validation (TEVV - Test, Evaluation, Verification, and Validation):

Ethics: Involves auditing the model for fairness and bias across different demographic groups.29 Ethical impact assessments are conducted to evaluate potential societal impacts.36

Safety: Assesses robustness against various failure modes, security vulnerabilities (including adversarial attacks through red teaming 26), reliability under various conditions, and alignment failures where the model behaves contrary to its designers' intentions.23 Performance is benchmarked against predefined criteria.40

Governance: Utilizes metrics and methodologies (NIST RMF's MEASURE function 1) to evaluate performance and risk. Test results are documented. Compliance with internal requirements and external regulations is evaluated.51 Independent audits may be required for high-risk systems.25
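To illustrate what a fairness audit across demographic groups at this stage might look like in its simplest form, the sketch below compares the rate of favourable decisions between groups, a measure often called the demographic parity gap. This is only one of many fairness metrics and is not prescribed by the frameworks cited here. The data, the group labels, and the `AUDIT_THRESHOLD` value are hypothetical assumptions made for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of favourable (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: 1 = favourable decision, 0 = unfavourable.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
grps = ["A", "A", "A", "A", "B", "B", "B", "B"]

# Assumed internal policy value; no cited framework mandates this number.
AUDIT_THRESHOLD = 0.2

rates = selection_rates(preds, grps)
gap = demographic_parity_gap(preds, grps)
flagged = gap > AUDIT_THRESHOLD

print("selection rates:", rates)  # {'A': 0.75, 'B': 0.25}
print("parity gap:", gap)         # 0.5
print("flag for review:", flagged)  # True
```

In practice, a flagged gap would trigger the documentation and review steps described under Governance rather than an automatic verdict, since a difference in selection rates can have legitimate as well as discriminatory explanations.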

Deployment:

Ethics: Providing appropriate transparency and explanations to end-users and those affected by the AI's decisions.19 Mechanisms for redress, appeal, or human review of contested decisions should be made available.54 Ensuring fairness in the implementation context is crucial (Implementation Fairness 36).

Safety: Securing the deployment environment itself. Implementing safety guardrails, monitoring systems, and procedures for human override or fallback in case of failure.19

Governance: Finalizing a risk assessment and mitigation plan before going live (NIST RMF's MANAGE function 1). Monitoring protocols are established, accountability structures for operational performance are confirmed, and users receive appropriate training on the system's capabilities and limitations.36

Operation & Monitoring:

Ethics: Continuous monitoring to detect emerging biases, discriminatory patterns, or other unfair outcomes that may arise with real-world data.29 Ongoing transparency about the system's operation.

Safety: Monitoring for performance degradation (e.g., "concept drift" where the real-world data changes over time 36), new security threats, unexpected behaviors, and safety incidents.26 Continuous risk monitoring.57

Governance: Ongoing audits (internal or external), compliance checks against evolving regulations, and tracking key performance and risk indicators.29 Models, policies, and documentation are updated based on monitoring results and feedback loops.46
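One simple proxy for the kind of drift that operational monitoring looks for is a shift in the distribution of an input feature between a reference period and live operation. The sketch below computes the Population Stability Index (PSI), a widely used summary of such a shift. It is offered as an illustration under stated assumptions, not as a method endorsed by the cited sources. The sample values, bin edges, and the 0.25 alert level (a common rule of thumb) are hypothetical, and a shift in inputs does not by itself prove that model performance has degraded.

```python
import bisect
import math


def bin_shares(values, edges, floor=1e-6):
    """Share of values falling in each bin defined by sorted `edges`.

    Bins are half-open [lo, hi), except the last bin, which also includes
    the final edge. Values outside the edges are not assigned to any bin.
    Assumes `values` is non-empty.
    """
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        if v == edges[-1]:
            idx = n_bins - 1
        if 0 <= idx < n_bins:
            counts[idx] += 1
    # A small floor avoids taking log(0) for empty bins.
    return [max(c / len(values), floor) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index between a reference and a live sample."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values from a reference period and from live use.
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
live = [0.6, 0.7, 0.8, 0.9, 0.9, 0.95, 0.85, 0.75]
edges = [0.0, 0.25, 0.5, 0.75, 1.0]

PSI_ALERT = 0.25  # rule-of-thumb level, treated here as an assumption

drift_score = psi(baseline, live, edges)
print("PSI:", drift_score)
print("drift alert:", drift_score > PSI_ALERT)
```

A score above the alert level would feed the governance loop described above, prompting investigation, possible retraining, and updated documentation.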

Decommissioning:

Ethics: Ensuring data used by the system is securely deleted or anonymized in accordance with privacy policies and regulations.

Safety: Safely shutting down the system and ensuring no residual safety risks remain.19

Governance: Documenting the decommissioning process, managing any risks or data retention requirements, and ensuring compliance with relevant policies.66

The interconnectedness of these stages and domains is profound. Choices made early in the lifecycle affect later stages and cross the boundaries between governance, safety, and ethics.1 For example, failing to address bias in data collection (an ethical and governance lapse at the data stage) leads to discriminatory outputs during deployment (an ethical failure) and compromises the system's reliability and safety, because its decisions rest on flawed data (a safety failure). Neglecting transparency in the design phase (an ethical and governance choice) hinders the ability to audit the system for safety or fairness later.10 Lack of robust security measures during development (a safety and governance failure) creates vulnerabilities that can be exploited during operation, leading to privacy breaches (an ethical failure) or system malfunction (a safety failure).51

This interdependence implies that effective AI oversight cannot be achieved through siloed efforts focused on isolated stages or domains. It requires an integrated, end-to-end approach where governance, safety, and ethical considerations are embedded from the very beginning ("by design") and are managed throughout the system's existence.

IV. Ecosystem of Responsibility: Stakeholder Roles and Duties

Navigating the complexities of AI requires a multi-stakeholder approach, where various "AI actors" – defined by the OECD and NIST as individuals and organizations that play an active role across the AI lifecycle 1 – understand and fulfill their responsibilities. These responsibilities are context-dependent and based on the actor's role and capacity to act 1, necessitating a sense of collective responsibility for trustworthy AI.1

A. Governments & Regulatory Bodies

Governments play a pivotal role in shaping the environment in which AI is developed and deployed. Their responsibilities span governance, safety, and ethics:

Governance: Governments are primarily responsible for establishing the overarching legal and regulatory frameworks for AI.5 This includes enacting AI-specific legislation (like the EU AI Act 5), often adopting a risk-based approach targeting high-risk applications.31 They define national AI strategies 34, set technical standards or endorse international ones 26, and create oversight bodies (e.g., the EU AI Office 31) to monitor compliance and enforce rules. A key governance function is promoting international cooperation to ensure regulatory interoperability and address the global nature of AI.5 Governments also leverage their purchasing power through public procurement policies to drive the adoption of responsible AI practices.135 Some frameworks advocate for governments to vigorously enforce existing laws applicable to AI harms 27 and consider approaches that shift the burden of proving safety onto developers.27 Mandating disclosures, such as the climate impact of AI systems, also falls under governance.143

Safety: Governments have a responsibility to ensure public safety in the face of AI risks. This involves investing in AI safety research 19, establishing national AI Safety Institutes or similar bodies to develop testing methodologies and monitor risks 7, and setting safety standards and evaluation requirements, particularly for high-risk or frontier AI models.31 They may mandate AI incident reporting to learn from failures 144 and must manage AI risks related to national security, including autonomous weapons.96

Ethics & Society: A fundamental role of government is to protect fundamental human rights, democratic values, and civil liberties in the age of AI.19 This includes enacting and enforcing laws against algorithmic discrimination 19, ensuring robust privacy and data protection frameworks 19, and addressing broader societal impacts such as AI's effect on the labor market and the spread of misinformation.19 Promoting widespread AI literacy is also increasingly seen as a government responsibility.60 Fostering an inclusive AI ecosystem that benefits all segments of society is another key ethical goal.55

B. Developers & Corporations

Entities that design, develop, and deploy AI systems bear significant responsibilities:

Governance: Corporations should adopt and implement formal internal AI governance frameworks, potentially leveraging established models like the NIST AI RMF.1 This involves establishing clear internal policies, defining roles and responsibilities (including potentially a Chief AI Officer or ethics committees 25), adhering to external regulations and standards 47, implementing systematic risk management processes 1, and maintaining thorough documentation and traceability throughout the AI lifecycle.54 Engaging in voluntary self-governance initiatives and adhering to industry best practices is also encouraged.26

Safety: Developers have a primary duty to design and build AI systems that are robust, secure, and reliable.19 This includes investing in technical safety research and implementing state-of-the-art alignment techniques.23 Rigorous testing, evaluation, verification, and validation (TEVV), including adversarial testing or red teaming, is essential before deployment.1 Implementing security best practices to protect models and data is critical.51 Companies developing powerful models may adopt specific safety frameworks (e.g., OpenAI's Preparedness Framework 99) and must continuously monitor systems for safety issues after deployment.26 Under some proposed governance models, the onus is on developers to demonstrate their systems are not harmful.27

Ethics: Corporations must embed ethical principles – fairness, transparency, accountability, privacy, non-maleficence – into their AI development processes and corporate culture.12 This requires proactively mitigating bias through diverse data sourcing, algorithmic fairness techniques, and regular audits.12 Prioritizing transparency and explainability (using techniques like LIME or SHAP where appropriate 51) is key to building trust.12 Protecting user data and privacy through measures like Privacy by Design is essential.12 Organizations should conduct ethical impact assessments 36, develop internal AI codes of ethics 46, and engage collaboratively with diverse stakeholders, including affected communities.46
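One way to make the "regular audits" for bias mentioned above concrete is to compare how often each group receives a favorable decision. The sketch below computes per-group selection rates and the gap between the highest and lowest rate, a measure often called the demographic parity difference. It is a minimal illustration, not a complete fairness audit: the function names, the toy data, and the choice of this single metric are assumptions made for the example, and a real audit would use several metrics and real decision logs.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = favorable decision, 0 = unfavorable decision.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

A gap near zero means the groups are selected at similar rates. What size of gap is acceptable is a policy decision for the organization and its regulators, not something the code can settle.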

C. Adopters (Organizations Implementing AI)

Organizations that procure and implement AI systems developed by others also have distinct responsibilities:

Governance: Adopters must perform due diligence on AI systems before procurement and deployment, assessing their risks and alignment with organizational needs and values.137 They need to establish clear internal policies governing the use of specific AI tools 51, assign responsibility for oversight within their specific operational context 25, and ensure compliance with applicable regulations and standards.28 Risk classification of deployed systems and tailored governance measures are recommended.53

Safety: The primary safety responsibility for adopters lies in ensuring the safe integration and use of AI within their specific environment. This includes providing adequate human oversight, particularly for high-risk applications, and establishing clear fallback procedures or human-in-the-loop workflows.54 They must monitor the AI's performance and safety within their operational context, as performance can differ from lab settings.56

Ethics: Adopters must ensure that the application of the AI aligns with their organization's ethical values and guidelines.25 They have a responsibility to monitor the deployed system for biased or unfair outcomes within their specific user population 49 and ensure transparency is provided to their own users or affected individuals.46 Protecting any additional data collected or processed by the AI system during its operation is crucial.46 Training employees on the responsible and ethical use of the specific AI tool, including its limitations, is a key ethical duty.36
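The human oversight and fallback procedures described for adopters can be sketched as a simple routing rule: outputs for high-risk cases, or outputs the model is not confident about, go to a person, and a failure of the model triggers a predefined fallback. Everything named here (`Prediction`, `route`, `decide`, the 0.9 threshold) is hypothetical and chosen only for illustration; an actual workflow would depend on the tool and the operational context.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model-reported score in [0, 1] (assumed available)


def route(prediction, high_risk, threshold=0.9):
    """Decide whether an output is applied automatically or sent to a person."""
    if high_risk:
        return "human_review"  # high-risk cases always get human oversight
    if prediction.confidence < threshold:
        return "human_review"  # low confidence is escalated to a reviewer
    return "auto_apply"


def decide(model, case, high_risk, fallback):
    """Run the model; if it fails, use the fallback procedure instead."""
    try:
        prediction = model(case)
    except Exception:
        return ("fallback", fallback(case))
    return (route(prediction, high_risk), prediction.label)


# Hypothetical usage with stand-in callables.
def toy_model(case):
    return Prediction(label="approve", confidence=0.95)


def manual_queue(case):
    return "sent to manual processing"


print(decide(toy_model, {"id": 1}, high_risk=False, fallback=manual_queue))
print(decide(toy_model, {"id": 2}, high_risk=True, fallback=manual_queue))
```

The design choice worth noting is that the high-risk check comes first, so a confident model can never bypass review for a sensitive decision.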

D. Users & the Public

End-users and the broader public, including those indirectly affected by AI systems, also have roles and responsibilities in the ecosystem:

Governance Engagement: Citizens can participate in public consultations on AI policy 59, provide feedback to developers and deployers about AI system performance and impact 61, understand their rights concerning AI-driven decisions (e.g., right to explanation or appeal) 54, and advocate for responsible AI governance.149

Safe & Responsible Use: Users should strive to understand the intended purpose, capabilities, and limitations of the AI tools they interact with.149 They have a responsibility to use these tools ethically, safely, and in accordance with terms of service.45 This includes protecting their own personal data and avoiding the input of confidential information into public AI tools unless approved.87 Users should be vigilant against AI-generated misinformation, deepfakes, and phishing attempts 45 and report suspected misuse or safety issues.87

Ethical Awareness: Developing a basic level of AI literacy – understanding AI concepts, recognizing AI applications, and being aware of ethical implications – is increasingly important for informed citizenship and participation.21 Users should critically evaluate AI-generated content for accuracy, bias, and potential harm, rather than accepting it at face value.87 Respecting intellectual property rights and avoiding plagiarism when using generative AI tools is also an ethical responsibility.45 Ultimately, users play a role in demanding transparency and accountability from those who create and deploy AI systems.74

The Accountability Diffusion Challenge

While principles like accountability are central to governance frameworks 1, translating them into practice presents a significant challenge. The AI lifecycle involves a complex chain of actors: designers conceptualize, data providers supply training material, developers build models, deployers integrate them into specific contexts, and users interact with them.1 Furthermore, many powerful AI systems, particularly those based on deep learning, operate as "black boxes," making their internal decision-making processes difficult or impossible to fully trace.10

When an AI system causes harm – whether through a biased loan decision, an autonomous vehicle accident, or the generation of harmful content – pinpointing the precise cause and assigning responsibility becomes incredibly difficult.10 Was it flawed data? A design oversight? An algorithmic error? A misuse by the deployer or user? An unforeseen interaction in a complex environment? This ambiguity creates an "accountability gap" 36, where responsibility becomes diffused across the many actors and obscured by technical opacity. Existing legal frameworks for liability may also struggle to adequately address harms caused by autonomous or complex AI systems.69

Addressing this diffusion of accountability requires more than just stating that actors are responsible. It necessitates robust governance mechanisms that enhance traceability throughout the lifecycle 54, clear contractual and legal definitions of roles and liabilities, potentially new models for shared responsibility, and specific regulations tailored to AI-related harms.31 Without such measures, the principle of accountability risks remaining largely symbolic, undermining trust and hindering effective redress for those harmed by AI failures.
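As an illustration of what lifecycle traceability could look like in practice, the sketch below keeps an append-only log in which each entry records the actor, the lifecycle stage, and a hash that links it to the previous entry, so later tampering can be detected. This is one possible approach, not a method prescribed by any of the cited frameworks; the field names and the `append_event` and `verify` helpers are assumptions made for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(body):
    """Hash an entry body deterministically (keys sorted before encoding)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_event(log, actor, stage, detail):
    """Append a lifecycle event that is chained to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"actor": actor, "stage": stage, "detail": detail, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})
    return log


def verify(log):
    """Return True only if every entry is intact and correctly chained."""
    prev_hash = GENESIS
    for entry in log:
        body = {key: entry[key] for key in ("actor", "stage", "detail", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical events covering several actors in the chain.
log = []
append_event(log, "data provider", "data collection", "training set v1 delivered")
append_event(log, "developer", "training", "model v1 trained on training set v1")
append_event(log, "deployer", "deployment", "model v1 integrated into loan workflow")
print(verify(log))
```

A log like this does not assign responsibility by itself, but it preserves who did what and in which order, which is the factual basis that contractual and legal allocations of liability rely on.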

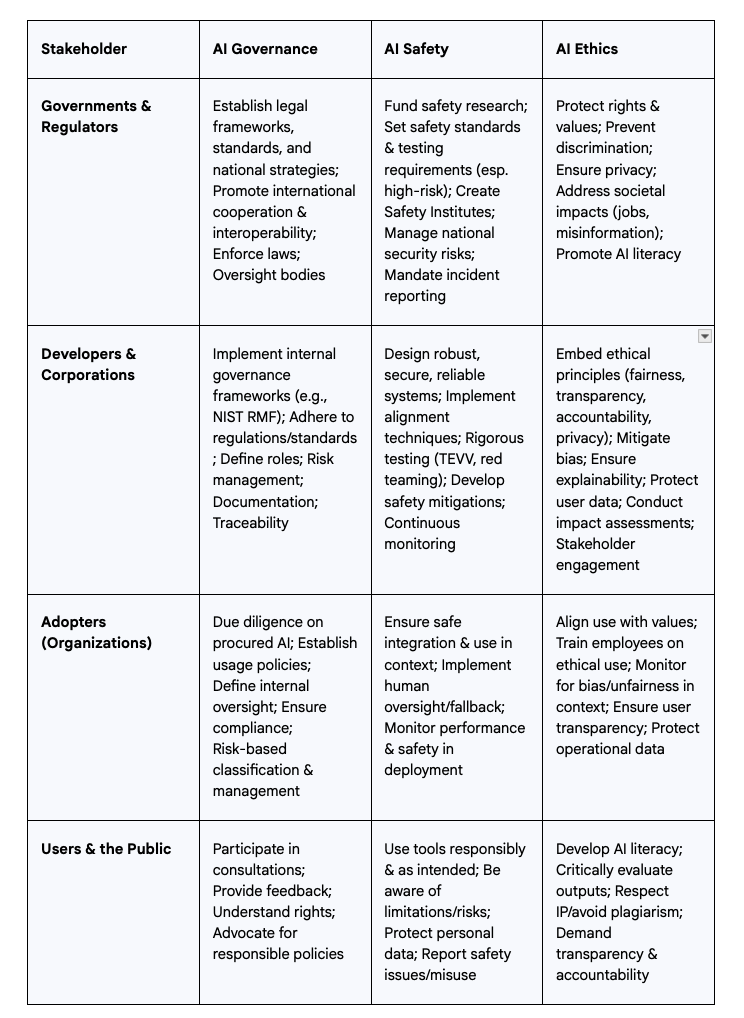

Stakeholder Responsibility Matrix

The following table summarizes the key responsibilities of different stakeholders across the domains of AI Governance, Safety, and Ethics:

V. Charting the Course: Recommendations for Responsible AI

Based on the analysis of governance, safety, and ethics principles, and drawing from established frameworks and expert recommendations, specific actions can be outlined for key stakeholders to foster a more responsible AI ecosystem.

A significant challenge, however, lies in bridging the gap between principle and practice. Despite the proliferation of ethical guidelines and governance frameworks 1, evidence suggests a considerable "implementation gap".37 Many organizations acknowledge AI risks but have not fully implemented mitigation strategies 40, and investment in governance often lags behind the pace of AI adoption.52 High failure rates for AI projects are frequently linked to data quality and governance shortcomings.159 This gap arises from various factors: the difficulty in operationalizing abstract principles 36, insufficient regulatory enforcement or capacity 39, business pressures prioritizing speed and profit over safety and ethics 27, a lack of necessary expertise or resources within organizations 39, and the inherent complexity of the technology itself.30 Closing this gap requires more than just publishing principles; it demands strong leadership commitment 25, tangible investment in governance tools and processes 26, comprehensive workforce training 49, the development of concrete evaluation metrics 36, and potentially stronger external pressures through regulation or market demands. The following recommendations aim to provide concrete steps towards closing this gap.

A. Recommendations for Governments

Strengthen Governance & Regulation: Vigorously enforce existing laws applicable to AI harms, such as anti-discrimination and data protection laws.27 Develop clear, agile, and risk-based AI regulations, potentially modeled on frameworks like the EU AI Act, focusing requirements on high-risk applications.5 Establish unambiguous definitions and technical standards to ensure clarity for developers and deployers.50 Actively promote international cooperation to harmonize standards and regulations, fostering interoperability and avoiding conflicting requirements.5 Create dedicated national or regional AI oversight bodies with adequate resources and expertise.31 Utilize government procurement processes to incentivize and mandate responsible AI practices among vendors.135 Consider adopting "Zero Trust" principles that require companies to proactively demonstrate the safety and non-harmfulness of their systems.27 Mandate transparency regarding significant societal impacts, such as the environmental footprint of large AI models.143

Prioritize Safety: Increase public funding for independent AI safety research, including foundational work on alignment, robustness, and control.19 Establish and support national AI Safety Institutes or similar centers of expertise focused on developing evaluation methodologies and monitoring emerging risks.7 Develop and mandate robust testing, evaluation, verification, and validation (TEVV) standards for AI systems, particularly frontier models with potentially high impact.31 Implement mandatory AI incident reporting regimes to facilitate learning from failures and near-misses.144

Uphold Ethics & Societal Well-being: Ensure AI policies explicitly protect fundamental human rights, privacy, and democratic values.19 Implement and enforce strong measures against algorithmic discrimination in areas like employment, housing, and credit.19 Invest in public AI literacy initiatives and workforce retraining programs to help citizens and workers adapt to AI's impacts.48 Develop policies to mitigate labor market disruptions caused by automation.48 Foster an inclusive AI ecosystem that ensures benefits are widely shared.55

B. Recommendations for Developers and Corporations

Institutionalize Governance: Adopt formal AI governance frameworks (e.g., NIST AI RMF, ISO 42001) and integrate them into organizational structures and processes.1 Establish dedicated internal AI governance bodies, such as ethics committees or review boards, with clear authority and resources.25 Define and document roles, responsibilities, and accountability pathways for AI development and deployment.25 Implement rigorous documentation practices and ensure traceability throughout the AI lifecycle.54 Engage proactively in industry self-governance efforts and adopt recognized best practices.26

Embed Safety Practices: Make safety a core design principle ("Safety by Design").36 Invest significantly in internal technical safety research and capacity building, focusing on alignment, robustness, and security.95 Conduct thorough pre-deployment TEVV, including adversarial testing (red teaming) and validation against diverse scenarios.1 Implement comprehensive cybersecurity measures tailored to AI systems.51 Develop and publicly articulate clear safety frameworks and risk mitigation strategies, especially for advanced models.99 Implement continuous monitoring systems to detect safety issues, performance degradation, or misuse post-deployment.26

Operationalize Ethics: Integrate ethical principles directly into AI development workflows and company culture.12 Actively work to identify and mitigate biases in data and algorithms through techniques like diverse dataset curation, fairness metrics, and bias-aware machine learning.12 Prioritize transparency and develop explainable AI (XAI) methods where feasible and appropriate.12 Implement robust data privacy measures, adhering to principles like Privacy by Design.12 Conduct regular ethical impact assessments to anticipate and address potential negative consequences.36 Foster collaboration with external ethicists, researchers, and affected communities.46

C. Recommendations for Adopters (Organizations)

Implement Strong Governance: Develop clear internal policies and guidelines for the procurement and use of AI systems within the organization.51 Conduct thorough due diligence on third-party AI tools, assessing their risks, limitations, and compliance with ethical and legal standards.137 Assign clear ownership and oversight responsibilities for deployed AI systems.25 Ensure organizational use of AI complies with all relevant regulations (e.g., data protection, sector-specific rules).28 Implement a risk-based approach, applying stricter governance and oversight to AI systems used in high-impact or sensitive applications.53

Ensure Safe Use: Carefully evaluate how AI systems will be integrated into existing workflows and potential impacts on safety.1 Implement appropriate levels of human oversight, review, and intervention, especially for decisions with significant consequences.19 Establish clear fallback procedures for when AI systems fail or produce unreliable results.46 Monitor the AI's performance, reliability, and safety within the specific operational context.56

Promote Ethical Application: Ensure the use of AI aligns with the organization's mission, values, and ethical commitments.25 Provide comprehensive training to employees on the ethical use of specific AI tools, their capabilities, limitations, and potential biases.36 Monitor deployed systems for evidence of bias or unfair outcomes in the specific user population and take corrective action.49 Ensure transparency for employees, customers, or citizens interacting with or affected by the AI system.46 Safeguard any data collected or processed by the AI system during its operation.46
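The risk-based approach recommended above for adopters, with stricter governance for high-impact or sensitive uses, can be sketched as a small classification table. The three screening questions, the tier names, and the control lists below are hypothetical and serve only to show the shape of such a scheme; an organization would define its own criteria, ideally aligned with the regulations that apply to it.

```python
# Hypothetical controls per tier; each tier adds to the one below it.
CONTROLS = {
    "low": ["usage policy"],
    "medium": ["usage policy", "periodic performance review"],
    "high": [
        "usage policy",
        "periodic performance review",
        "human review of decisions",
        "bias monitoring",
    ],
}


def risk_tier(affects_individuals, sensitive_domain, fully_automated):
    """Assign a tier from three yes/no screening questions (assumed criteria)."""
    score = sum([affects_individuals, sensitive_domain, fully_automated])
    if score >= 2:
        return "high"
    if score == 1:
        return "medium"
    return "low"


def required_controls(affects_individuals, sensitive_domain, fully_automated):
    """Look up the governance controls for a deployed system."""
    return CONTROLS[risk_tier(affects_individuals, sensitive_domain, fully_automated)]


# Example: a tool that screens job applicants without human involvement.
print(required_controls(affects_individuals=True, sensitive_domain=True, fully_automated=True))
```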

D. Recommendations for Users & the Public

Engage and Advocate: Participate in public discourse and consultations regarding AI development and governance.59 Provide constructive feedback to developers and organizations about AI experiences.59 Understand fundamental rights related to data privacy and automated decision-making.54 Advocate for policies that promote responsible and beneficial AI.149

Practice Safe and Responsible Use: Strive to understand the basic functionality, intended use, and limitations of AI tools encountered.149 Use AI systems ethically, respecting terms of service, intellectual property rights, and the rights of others.87 Exercise caution when sharing personal or confidential information with AI systems, particularly public-facing ones.87 Be critically aware of the potential for AI-generated misinformation, deepfakes, and phishing scams, and verify information from other sources.45 Report suspected misuse, security flaws, or safety incidents associated with AI tools.87

Cultivate Ethical Awareness and Literacy: Invest time in developing personal AI literacy – understanding what AI is, how it works, and its societal implications.21 Critically evaluate outputs from AI systems, questioning their accuracy, potential biases, and sources, rather than blindly trusting them.87 Respect copyright and avoid plagiarism when utilizing AI-generated content.45 Hold developers and deployers accountable by demanding transparency and ethical practices.74

VI. The Horizon: Future Evolutions and Emerging Challenges

The landscape of AI governance, safety, and ethics is not static but is continually reshaped by rapid technological advancements, evolving societal understanding, and ongoing policy debates. Anticipating future trends and challenges is crucial for navigating the path forward responsibly.

A. Evolving Governance Landscapes

Increased Regulation & Standardization: The trend towards more specific AI regulation is expected to continue globally.5 While approaches may remain fragmented across jurisdictions 5, convergence around core principles like safety, transparency, fairness, and accountability is likely.5 Regulations will increasingly focus on risk-based approaches, targeting high-risk AI applications and the governance of powerful general-purpose AI (GPAI) or foundation models.5 Recent policy shifts in the U.S., such as the April 2025 OMB Memos M-25-21 and M-25-22 under the Trump administration, signal a renewed emphasis on promoting American AI innovation and competitiveness, while still mandating risk management practices (like impact assessments and testing) for "high-impact" AI use cases within federal agencies.135 This contrasts with the previous administration's approach but maintains a focus on governance for critical applications. Concurrently, state-level AI legislation addressing issues like algorithmic discrimination, deepfakes in elections, and chatbot transparency continues to proliferate.31 International technical standards from bodies like ISO and IEEE will gain importance in providing common benchmarks and facilitating regulatory compliance and interoperability.6

Rise of AI Auditing & Monitoring: As regulations mature and stakeholder expectations rise, the demand for independent verification of AI systems' compliance, safety, and fairness will grow significantly.11 This will drive the development and adoption of standardized AI auditing frameworks (e.g., based on IIA, ISO/IEC 23053, ICO, COBIT guidelines 57) and practices. A trend towards automated tools for compliance monitoring, risk assessment, and governance enforcement is also emerging, aiming to handle the scale and complexity of AI deployments.26 However, a significant remaining challenge is the lack of robust, standardized benchmarks for evaluating the responsibility of AI models, particularly large language models (LLMs), which hinders systematic comparison and risk assessment.37

Global Cooperation Challenges & Solutions: Given AI's inherently global nature, effective governance requires international cooperation to address cross-border data flows, shared risks, and the potential for regulatory arbitrage.5 Numerous initiatives, including the OECD.AI Policy Observatory, the G7 process, the AI Safety Summits (Bletchley, Seoul, Paris 7), UN bodies (UNESCO, AI Advisory Body 7), and the World Economic Forum's AI Governance Alliance 6, are working to foster dialogue, share best practices, and promote convergence. However, achieving meaningful cooperation faces obstacles such as differing national priorities (e.g., balancing innovation with rights protection 133), geopolitical competition and mistrust 5, and ensuring the equitable inclusion and capacity building of developing nations (the Global South).6 Potential solutions involve networked governance structures that leverage the strengths of various existing institutions (like the OECD 90), focusing cooperation on specific functional areas (like standards development or risk assessment methodologies 132), and prioritizing initiatives that expand global access to AI benefits and resources.6

B. The Frontier of AI Safety

AGI Alignment & Control Problem: Looking further ahead, the prospect of Artificial General Intelligence (AGI) – AI matching or exceeding human capabilities across a wide range of cognitive tasks 106 – presents profound safety challenges.8 Ensuring that such powerful systems remain aligned with human values and under meaningful human control is considered a critical, unsolved problem by many experts.23 Concerns about potential misuse leading to large-scale harm or even existential risks are frequently raised.8 Key technical hurdles include developing methods for scalable oversight (supervising systems smarter than humans), achieving reliable interpretability of complex models, and preventing the emergence of unintended, misaligned goals such as deception or uncontrolled power-seeking behavior.23 There is debate about whether current alignment techniques (like RLHF, RLAIF, or weak-to-strong generalization 97) will be sufficient for AGI/superintelligence, driving research into novel approaches like intrinsic alignment, explainable autonomous alignment, and adaptive human-AI co-alignment.23 Some experts question whether the current deep learning paradigm itself can ever provide robust safety guarantees.116

Increasing Capabilities & Emergent Risks: Even short of AGI, the rapid improvement in AI capabilities – including advanced reasoning, multimodal understanding (processing text, images, audio), and the development of agentic AI systems capable of autonomous planning and action 18 – introduces new and often unpredictable risks. These include the potential for more sophisticated misuse (e.g., AI-assisted cyberattacks, development of novel threats 8), failures in complex systems arising from unforeseen interactions 168, and harmful emergent behaviors that were not explicitly programmed or anticipated.95 Accurately forecasting, evaluating, and mitigating these novel risks remains a significant challenge.40

Safety Research & Investment Gaps: A persistent concern is that investment and progress in AI capabilities significantly outpace dedicated efforts in AI safety research and the implementation of robust governance.52 This disparity is exacerbated by intense commercial and geopolitical competition, which can incentivize labs to prioritize speed and performance over thorough safety testing and risk mitigation.18 Addressing this requires increased funding for foundational safety science 127 and stronger incentives (regulatory or otherwise) for prioritizing safety.

C. Emerging Ethical Frontiers

New Ethical Dilemmas: The proliferation of new AI capabilities, particularly generative AI, continuously surfaces novel ethical questions. These include issues surrounding intellectual property and copyright infringement due to training data or generated outputs 40, the potential for AI to generate increasingly convincing misinformation, deepfakes, and propaganda at scale 3, the ethics of human-AI relationships (e.g., emotional attachment to chatbots, potential for manipulation 10), and fundamental questions about creativity, authorship, and authenticity in an era of AI generation.78 The rise of agentic AI systems capable of autonomous action raises further ethical concerns about control, responsibility, and unintended consequences.18

Environmental Impact: There is growing awareness and concern regarding the substantial environmental footprint of AI, particularly large-scale models.20 This includes significant electricity consumption for training and inference (potentially reaching 3-4% of global electricity consumption by 2030 20), high water usage for cooling data centers 174, and the contribution to electronic waste from specialized hardware demands.20 Addressing this requires a multi-pronged approach: developing more energy-efficient hardware (e.g., TPUs 174) and algorithms (e.g., model pruning, optimized training 174), powering data centers with renewable energy and locating them strategically in regions with cleaner grids 174, implementing stronger policies mandating transparency and benchmarking of environmental costs 48, and exploring AI's potential to contribute positively to environmental monitoring and optimization.20

AI Literacy Imperative: As AI becomes ubiquitous, the need for broad AI literacy across the population is increasingly recognized as a societal imperative.21 This involves more than just technical skills; it encompasses understanding fundamental AI concepts, recognizing AI applications in daily life, appreciating capabilities and limitations, being aware of ethical implications (like bias and privacy), using AI tools safely and effectively, and critically evaluating AI-generated information.149 AI literacy empowers individuals to navigate an AI-infused world, participate meaningfully in governance debates, adapt to workforce changes, and maintain agency.21 Educational systems and organizations need to prioritize AI literacy programs.147

Evolving Philosophical Questions: AI continues to push the boundaries of philosophical inquiry. Ongoing debates explore the potential for machine consciousness, the criteria for granting moral status or rights to AI, the very definition of intelligence and understanding, and the long-term implications of advanced AI for human identity, purpose, and societal structures.4

D. Lessons from AI Incidents and Case Studies

Real-world failures provide critical lessons for improving governance, safety, and ethics:

Illustrative Failures: Numerous incidents highlight the tangible consequences when AI systems fail:

Bias and Discrimination: High-profile cases like Amazon's biased hiring tool 15, the COMPAS recidivism algorithm's racial bias 16, bias in UK passport photo checks 30, gender bias in Apple Card credit limits 16, and racial bias in healthcare algorithms 16 vividly demonstrate the harm caused by biased training data and inadequate fairness testing.16

Safety and Reliability Failures: The struggles of IBM Watson for Oncology, which provided inaccurate and sometimes unsafe treatment recommendations due partly to reliance on synthetic data and U.S.-centric guidelines 82, Zillow's multimillion-dollar losses from an inaccurate home-valuation algorithm 181, and fatal accidents involving Tesla's Autopilot system due to environmental misinterpretation 105 underscore the critical need for rigorous real-world testing, validation against diverse conditions, and a clear understanding of system limitations.

Privacy Violations: Paramount's class-action lawsuit over sharing subscriber data without consent 29, the Cambridge Analytica scandal involving misuse of Facebook user data 15, and the potential for re-identification from derived data in a surgical robotics tool 29 emphasize the necessity of robust data governance, clear consent mechanisms, and privacy-preserving technologies.

Transparency and Accountability Gaps: Air Canada being held liable for misinformation provided by its chatbot 181 and the controversy surrounding the proprietary nature of the COMPAS algorithm hindering independent verification 79 highlight the importance of explainability and clear lines of responsibility for AI outputs.

Incident Reporting Trends: Data confirms that reported AI incidents are increasing sharply year over year 40, spanning diverse harms like misuse, discrimination, safety failures, and privacy breaches (as tracked by resources like the AI Incident Database 40). However, many incidents likely go unreported due to reputational concerns or lack of detection.172 Recent incident reports (Q1/Q2 2025) highlight ongoing issues with web browser vulnerabilities facilitating attacks, rising insider threats, persistent phishing, chatbot errors leading to financial or safety consequences (e.g., endorsing suicide, making unauthorized transactions), and the continued proliferation of deepfakes.13

Success Factors (Implied): Conversely, case studies suggesting successful AI governance often point to proactive and integrated approaches. Examples include an e-commerce company implementing end-to-end data lineage tracking for compliance and trust 29, a bank using real-time monitoring to flag and fix bias before deployment 29, and the use of comprehensive AI governance platforms to manage risk across the lifecycle.85 Common themes in these successes include robust data governance, continuous monitoring, proactive bias detection and mitigation, adherence to clear policies, investment in specialized governance tools, and meaningful stakeholder engagement.

The pattern emerging from these incidents and case studies suggests a potential systemic issue. The response to many AI risks, particularly those extending beyond immediate technical malfunction into broader ethical and societal harms, often appears reactive. Public awareness, media attention, and regulatory action frequently gain momentum only after significant failures or controversial deployments occur.4 While learning from incidents through reporting and analysis is crucial 144, relying primarily on this reactive loop is insufficient, and potentially dangerous, given the potential speed, scale, and novelty of harms that advanced AI systems could unleash.8 We may not have the luxury of learning from catastrophic failures after the fact. This reality underscores the critical need to shift towards more proactive and anticipatory approaches to governance and safety. This involves robust foresight exercises, structured risk assessment methodologies applied early and continuously (like the NIST AI RMF 1), mandatory pre-deployment testing and evaluation for high-risk systems 59, and the systematic use of ethical impact assessments to identify and mitigate potential harms before they materialize.36

VII. Conclusion: Towards Integrated AI Oversight

The advancement and societal integration of artificial intelligence require a sophisticated, multi-faceted approach to oversight. This analysis has presented the distinct yet intertwined domains of AI Governance, AI Safety, and AI Ethics.

AI Governance provides the structure and control mechanisms – the policies, regulations, standards, and processes that are required to direct AI development and deployment in alignment with legal, organizational, and societal objectives.

AI Safety focuses on harm prevention and reliability, employing technical and sociotechnical methods to ensure that AI systems function securely and robustly and remain under human control, while mitigating risks that range from immediate accidents and misuse to potential long-term harmful outcomes.

AI Ethics offers moral guidance and value alignment, evaluating AI's impacts through principles like fairness, autonomy, privacy, and justice to make sure that technology serves human well-being and respects fundamental rights.

It is evident that these pillars cannot function effectively in isolation. Ethical principles form the foundation for both governance and safety. Governance provides the practical means to implement and enforce ethical guidelines and safety protocols. Safety measures are prerequisites for achieving ethical outcomes, preventing harm, and building the trust that effective governance depends on. Their principles frequently overlap – transparency, accountability, fairness, security, privacy, and robustness are common threads, viewed through the distinct lenses of control, harm prevention, and moral justification.

The path forward requires an integrated, holistic approach. Responsible AI cannot be an afterthought or a siloed function. Ethical considerations and safety requirements must be embedded within robust governance frameworks across the entire lifecycle, from planning and data collection through development, testing, deployment, and ongoing monitoring.1 This "by design" philosophy, combined with continuous evaluation and evolution, is necessary to navigate the complexities of AI.

Responsibility for AI oversight is distributed across a complex ecosystem, which makes multi-stakeholder collaboration essential.5 Governments should establish clear rules and foster cooperation; developers and corporations must prioritize safety and ethics alongside innovation; adopting organizations must ensure responsible implementation; and users and the public must cultivate literacy and demand accountability.

References cited

Artificial Intelligence Risk Management Framework (AI RMF 1.0) - NIST Technical Series Publications, accessed April 27, 2025, https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

An Overview of Artificial Intelligence Ethics - AMiner, accessed April 27, 2025, https://static.aminer.cn/upload/pdf/1101/1616/467/64bb058e3fda6d7f06031f4b_0.pdf

The Evolution of AI: From Foundations to Future Prospects - IEEE Computer Society, accessed April 27, 2025, https://www.computer.org/publications/tech-news/research/evolution-of-ai

Survey XII: What Is the Future of Ethical AI Design? | Imagining the Internet - Elon University, accessed April 27, 2025, https://www.elon.edu/u/imagining/surveys/xii-2021/ethical-ai-design-2030/

AI Regulation, Global Governance And Challenges - Forbes, accessed April 27, 2025, https://www.forbes.com/councils/forbestechcouncil/2024/11/12/ai-regulation-global-governance-and-challenges/

AI Governance Alliance Briefing Paper Series - World Economic Forum, accessed April 27, 2025, https://www3.weforum.org/docs/WEF_AI_Governance_Alliance_Briefing_Paper_Series_2024.pdf

Should the UN govern global AI? - Brookings Institution, accessed April 27, 2025, https://www.brookings.edu/articles/should-the-un-govern-global-ai/

Artificial Intelligence - Future of Life Institute, accessed April 27, 2025, https://futureoflife.org/artificial-intelligence/

Annual Report 2023 - Stanford Institute for Human-Centered Artificial Intelligence, accessed April 27, 2025, https://hai-production.s3.amazonaws.com/files/2024-04/2023-Annual-Report.pdf

Ethics of Artificial Intelligence | Internet Encyclopedia of Philosophy, accessed April 27, 2025, https://iep.utm.edu/ethics-of-artificial-intelligence/

The Future of AI Governance: Trends and Predictions - BABL AI, accessed April 27, 2025, https://babl.ai/the-future-of-ai-governance-trends-and-predictions/

Master Ethical AI Development: The Definitive Guide | SmartDev, accessed April 27, 2025, https://smartdev.com/a-comprehensive-guide-to-ethical-ai-development-best-practices-challenges-and-the-future/

2025 AI in law enforcement trends report: 3 key takeaways - Axon.com, accessed April 27, 2025, https://www.axon.com/blog/2025-ai-in-law-enforcement-trends-report-3-key-takeaways

The Ethical Dilemmas of Artificial Intelligence | AI Ethical Issues - GO-Globe, accessed April 27, 2025, https://www.go-globe.com/ethical-dilemmas-of-artificial-intelligence/

5 AI Ethics Concerns the Experts Are Debating | Ivan Allen College of Liberal Arts, accessed April 27, 2025, https://iac.gatech.edu/featured-news/2023/08/ai-ethics

Top 4 Real Life Ethical Issue in Artificial Intelligence | 2023 - XenonStack, accessed April 27, 2025, https://www.xenonstack.com/blog/ethical-issue-ai

Ethical concerns mount as AI takes bigger decision-making role - Harvard Gazette, accessed April 27, 2025, https://news.harvard.edu/gazette/story/2020/10/ethical-concerns-mount-as-ai-takes-bigger-decision-making-role/

The Imperative of AI Safety in 2025: The Near Future of Artificial Intelligence - Hyper Policy, accessed April 27, 2025, https://hyperpolicy.org/insights/the-imperative-of-ai-safety-in-2025-the-near-future-of-artificial-intelligence/

AI principles - OECD, accessed April 27, 2025, https://www.oecd.org/en/topics/ai-principles.html

Can We Mitigate AI's Environmental Impacts? - Yale School of the Environment, accessed April 27, 2025, https://environment.yale.edu/news/article/can-we-mitigate-ais-environmental-impacts

The New Essential: Why AI Literacy Matters in Education and Beyond, accessed April 27, 2025, https://www.weber.edu/itdivision/itsnews/jan2025.html

Artificial Intelligence and Privacy – Issues and Challenges - Office of the Victorian Information Commissioner, accessed April 27, 2025, https://ovic.vic.gov.au/privacy/resources-for-organisations/artificial-intelligence-and-privacy-issues-and-challenges/

An Approach to Technical AGI Safety and Security - arXiv, accessed April 27, 2025, https://arxiv.org/html/2504.01849v1

[2504.01849] An Approach to Technical AGI Safety and Security - arXiv, accessed April 27, 2025, https://arxiv.org/abs/2504.01849

What is AI Governance? - IBM, accessed April 27, 2025, https://www.ibm.com/think/topics/ai-governance

AI governance trends: How regulation, collaboration, and skills demand are shaping the industry - The World Economic Forum, accessed April 27, 2025, https://www.weforum.org/stories/2024/09/ai-governance-trends-to-watch/

Zero Trust AI Governance - AI Now Institute, accessed April 27, 2025, https://ainowinstitute.org/publication/zero-trust-ai-governance

Artificial Intelligence and Compliance: Preparing for the Future of AI Governance, Risk, and ... - NAVEX, accessed April 27, 2025, https://www.navex.com/en-us/blog/article/artificial-intelligence-and-compliance-preparing-for-the-future-of-ai-governance-risk-and-compliance/

AI Governance Examples—Successes, Failures, and Lessons Learned - Relyance AI, accessed April 27, 2025, https://www.relyance.ai/resources/ai-governance-examples

9 AI fails (and how they could have been prevented) - Ataccama, accessed April 27, 2025, https://www.ataccama.com/blog/ai-fails-how-to-prevent

AI trends for 2025: AI regulation, governance and ethics - Dentons, accessed April 27, 2025, https://www.dentons.com/en/insights/articles/2025/january/10/ai-trends-for-2025-ai-regulation-governance-and-ethics

AI Watch: Global regulatory tracker - United States | White & Case LLP, accessed April 27, 2025, https://www.whitecase.com/insight-our-thinking/ai-watch-global-regulatory-tracker-united-states

AI Governance: Key Benefits and Implementation Challenges - ISACA Now Blog, accessed April 27, 2025, https://www.isaca.org/resources/news-and-trends/isaca-now-blog/2024/ai-governance-key-benefits-and-implementation-challenges

The future of the world is intelligent: Insights from the World Economic Forum's AI Governance Summit - Brookings Institution, accessed April 27, 2025, https://www.brookings.edu/articles/the-future-of-the-world-is-intelligent-insights-from-the-world-economic-forums-ai-governance-summit/

T7 2025: Global Solutions to Global AI Challenges, accessed April 27, 2025, https://www.cigionline.org/multimedia/t7-2025-global-solutions-to-global-ai-challenges/

Understanding Artificial Intelligence Ethics and Safety - The Alan Turing Institute, accessed April 27, 2025, https://www.turing.ac.uk/sites/default/files/2019-06/understanding_artificial_intelligence_ethics_and_safety.pdf

Six Steps to Responsible AI in the Federal Government, accessed April 27, 2025, https://www.brookings.edu/articles/six-steps-to-responsible-ai-in-the-federal-government/

“German and U.S. Perspectives on AI Governance – Between Ethics and Innovation” - American Council On Germany, accessed April 27, 2025, https://acgusa.org/wp-content/uploads/2024/05/CIpierre_2023-McCloy-Fellowship-on-AI-Governance.pdf

Future Shock: Generative AI and the International AI Policy and Governance Crisis, accessed April 27, 2025, https://hdsr.mitpress.mit.edu/pub/yixt9mqu

Responsible AI | The 2024 AI Index Report - Stanford HAI, accessed April 27, 2025, https://hai.stanford.edu/ai-index/2024-ai-index-report/responsible-ai

The 2024 AI Index Report | Stanford HAI, accessed April 27, 2025, https://hai.stanford.edu/ai-index/2024-ai-index-report

Artificial Intelligence Index Report 2024 - AWS, accessed April 27, 2025, https://hai-production.s3.amazonaws.com/files/hai_ai-index-report-2024-smaller2.pdf

What the public thinks about AI and the implications for governance - Brookings Institution, accessed April 27, 2025, https://www.brookings.edu/articles/what-the-public-thinks-about-ai-and-the-implications-for-governance/

Make AI Safe: Why we need AI regulation - Future of Life Institute, accessed April 27, 2025, https://futureoflife.org/safety/

AI guidelines to encourage responsible use - CAI, accessed April 27, 2025, https://www.cai.io/resources/thought-leadership/ai-guidelines-to-encourage-responsible-use

A Guide to AI Governance: Navigating Regulations, Responsibility, and Risk Management, accessed April 27, 2025, https://www.modulos.ai/guide-to-ai-governance/

What is AI Governance? Benefits, needs, regulations and tips on using AI - WoodWing, accessed April 27, 2025, https://www.woodwing.com/blog/what-is-ai-governance

Navigating the Complex Landscape of AI Governance: Principles and Frameworks for Responsible Innovation | Healthcare Law Blog, accessed April 27, 2025, https://www.sheppardhealthlaw.com/2024/09/articles/artificial-intelligence/navigating-the-complex-landscape-of-ai-governance-principles-and-frameworks-for-responsible-innovation/

AI governance: What it is & how to implement it - Diligent, accessed April 27, 2025, https://www.diligent.com/resources/blog/ai-governance

Understanding AI governance in 2024: The stakeholder landscape | NTT DATA, accessed April 27, 2025, https://us.nttdata.com/en/blog/2024/july/understanding-ai-governance-in-2024

AI Governance Guide: Implementing Ethical AI Systems - Kong Inc., accessed April 27, 2025, https://konghq.com/blog/learning-center/what-is-ai-governance

Tuning Corporate Governance for AI Adoption, accessed April 27, 2025, https://www.nacdonline.org/all-governance/governance-resources/governance-research/outlook-and-challenges/2025-governance-outlook/tuning-corporate-governance-for-ai-adoption/

How AI Governance Ensures Freedom in a Digitally Controlled World with 3 Key Aspect, accessed April 27, 2025, https://blog.cxotransform.com/ai-governance/

Recommendation of the Council on Artificial Intelligence - OECD Legal Instruments, accessed April 27, 2025, https://legalinstruments.oecd.org/en/instruments/oecd-legal-0449

AI Principles Overview - OECD.AI, accessed April 27, 2025, https://oecd.ai/en/ai-principles

Safeguard the Future of AI: The Core Functions of the NIST AI RMF | AuditBoard, accessed April 27, 2025, https://www.auditboard.com/blog/nist-ai-rmf/

Understanding the AI Auditing Framework - Codewave, accessed April 27, 2025, https://codewave.com/insights/ai-auditing-framework-understanding/

AI Auditing: Ensuring Ethical and Efficient AI Systems - Centraleyes, accessed April 27, 2025, https://www.centraleyes.com/ai-auditing/

Ethical and Responsible AI Adoption in Government - REI Systems, accessed April 27, 2025, https://www.reisystems.com/roadmap-to-transformation-the-next-generation-of-government-operations-with-ethical-and-responsible-ai-adoption/

Ethics of Artificial Intelligence | UNESCO, accessed April 27, 2025, https://www.unesco.org/en/artificial-intelligence/recommendation-ethics

Best Practices For Ethical And Responsible AI Governance - Leaders in AI Summit, accessed April 27, 2025, https://www.leadersinaisummit.com/insights/best-practices-for-ethical-and-responsible-ai-governance

Preparing for AI regulations: A quick look at available frameworks - IAPP, accessed April 27, 2025, https://iapp.org/news/a/preparing-for-ai-regulations-a-quick-look-at-available-frameworks

Responsible AI - AI Index, accessed April 27, 2025, https://aiindex.stanford.edu/wp-content/uploads/2024/04/HAI_AI-Index-Report-2024_Chapter3.pdf

Stanford HAI AI Index Report: Responsible AI - AIwire, accessed April 27, 2025, https://www.aiwire.net/2024/04/18/stanford-hai-ai-index-report-responsible-ai/

Cybersecurity Snapshot: Cyber Agencies Offer Secure AI Tips, while Stanford Issues In-Depth AI Trends Analysis, Including of AI Security - Tenable, accessed April 27, 2025, https://www.tenable.com/blog/cybersecurity-snapshot-cyber-agencies-offer-secure-ai-tips-while-stanford-issues-in-depth-ai

The Board Director's Essential 10-Step Guide to Responsible AI Governance - LBZ Advisory, accessed April 27, 2025, https://liatbenzur.com/2024/04/11/board-directors-guide-responsible-ai-governance/

Artificial intelligence in auditing: Enhancing the audit lifecycle - Wolters Kluwer, accessed April 27, 2025, https://www.wolterskluwer.com/en/expert-insights/artificial-intelligence-auditing-enhancing-audit-lifecycle

IEEE Ethically Aligned Design - Palo Alto Networks, accessed April 27, 2025, https://www.paloaltonetworks.com/cyberpedia/ieee-ethically-aligned-design

The ethics of artificial intelligence: Issues and initiatives - European Parliament, accessed April 27, 2025, https://www.europarl.europa.eu/RegData/etudes/STUD/2020/634452/EPRS_STU(2020)634452_EN.pdf

Ethics of Artificial Intelligence and Robotics - Stanford Encyclopedia of Philosophy, accessed April 27, 2025, https://plato.stanford.edu/entries/ethics-ai/

Common ethical dilemmas for lawyers using artificial intelligence - Nationaljurist, accessed April 27, 2025, https://nationaljurist.com/smartlawyer/professional-development/common-ethical-dilemmas-for-lawyers-using-artificial-intelligence/

AI Governance: Best Practices and Importance | Informatica, accessed April 27, 2025, https://www.informatica.com/resources/articles/ai-governance-explained.html

Best practices for responsible AI implementation - Box Blog, accessed April 27, 2025, https://blog.box.com/responsible-ai-implementation-best-practices

AI Governance 101: Understanding the Basics and Best Practices - Zendata, accessed April 27, 2025, https://www.zendata.dev/post/ai-governance-101-understanding-the-basics-and-best-practices

AI Ethics Rely on Governance to Enable Faster AI Adoption | Gartner, accessed April 27, 2025, https://www.gartner.com/en/articles/ai-ethics

Principles for Responsible Use of AI | NCDIT - NC.gov, accessed April 27, 2025, https://it.nc.gov/resources/artificial-intelligence/principles-responsible-use-ai

The Global AI Vibrancy Tool November 2024 - AI Index - Stanford University, accessed April 27, 2025, https://aiindex.stanford.edu/wp-content/uploads/2024/11/Global_AI_Vibrancy_Tool_Paper_November2024.pdf

The Ethical Considerations of Artificial Intelligence | Washington D.C. & Maryland Area, accessed April 27, 2025, https://www.captechu.edu/blog/ethical-considerations-of-artificial-intelligence

AI Governance Failures - Vision.cpa, accessed April 27, 2025, https://www.vision.cpa/blog/ai-governance-failures

The ethical dilemmas of AI | USC Annenberg School for Communication and Journalism, accessed April 27, 2025, https://annenberg.usc.edu/research/center-public-relations/usc-annenberg-relevance-report/ethical-dilemmas-ai

Artificial Intelligence: examples of ethical dilemmas - UNESCO, accessed April 27, 2025, https://www.unesco.org/en/artificial-intelligence/recommendation-ethics/cases